Rendering a fractal flame takes a lot of memory, so it is important to consider how much memory a render needs relative to how much is available on your system. As a general rule, a system has more main memory available to the CPU than its GPU has onboard, so memory is less of a concern when rendering on the CPU.

As mentioned in the algorithm section, rendering requires three main data buffers: the histogram, density filtering buffer and final output image. The size of these buffers is governed by the dimensions of the image and the supersample value.

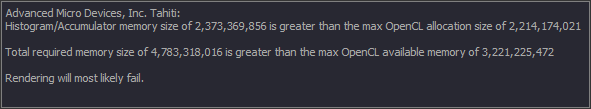
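As a rough illustration of how image dimensions and the supersample value drive buffer sizes, the sketch below estimates the three buffers in Python. The function name, the four 32-bit channels per bucket, the 4-byte output pixel, and the omission of any filter padding are all assumptions made for this example, not values taken from the renderer.

```python
def estimate_buffer_bytes(width, height, supersample=1,
                          channels=4, bytes_per_channel=4,
                          output_bytes_per_pixel=4):
    """Roughly estimate the size in bytes of the three render buffers.

    Assumptions (hypothetical, for illustration only):
    - each histogram and density filtering bucket stores `channels`
      values of `bytes_per_channel` bytes each;
    - both of those buffers use the supersampled dimensions;
    - the final image uses the plain image dimensions;
    - any padding the renderer adds around the buffers is ignored.
    """
    ss_width = width * supersample
    ss_height = height * supersample
    bucket_bytes = channels * bytes_per_channel

    histogram = ss_width * ss_height * bucket_bytes
    density_filter = histogram  # assumed to match the histogram's dimensions
    final_image = width * height * output_bytes_per_pixel

    return {
        "histogram": histogram,
        "density_filter": density_filter,
        "final_image": final_image,
        "total": histogram + density_filter + final_image,
    }


if __name__ == "__main__":
    sizes = estimate_buffer_bytes(1920, 1080, supersample=2)
    for name, size in sizes.items():
        print(f"{name}: {size / 2**20:.1f} MiB")
```

Under these assumptions, the histogram and density filtering buffer grow with the square of the supersample value, which is why they dominate the total for large renders.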

In most cases, all of the data fits within available memory. With larger images it might not, especially when using the GPU. In addition to generally having less memory, GPUs limit how large a single contiguous allocation can be. If the memory needs of the render exceed either of these limits, a warning appears in the Final Render dialog.

In this case, you can use strips to reduce the amount of memory used. This works by dividing the histogram and density filtering buffer into the number of horizontal strips specified. The final image buffer is not divided into strips since it must exist contiguously in its entirety in memory.
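To make the effect of strips on memory concrete, the following Python sketch searches for the smallest strip count that satisfies both limits. It is an illustration only: the function name and parameters are hypothetical, and the requirement that the strip count divide the image height evenly is an assumption of this example rather than a documented rule.

```python
def min_strips(height, histogram_bytes, density_bytes, final_bytes,
               available_bytes, max_alloc_bytes):
    """Return the smallest strip count that fits in memory, or None.

    The histogram and density filtering buffer are divided by the strip
    count; the final image buffer is not, since it must stay whole.
    """
    # The final image is never split, so it must fit on its own.
    if final_bytes > max_alloc_bytes or final_bytes > available_bytes:
        return None

    for strips in range(1, height + 1):
        if height % strips != 0:
            continue  # assumption: strips must divide the height evenly

        # Ceiling division gives the per-strip size of each divided buffer.
        hist = -(-histogram_bytes // strips)
        dens = -(-density_bytes // strips)

        fits_single_alloc = max(hist, dens) <= max_alloc_bytes
        fits_total = hist + dens + final_bytes <= available_bytes
        if fits_single_alloc and fits_total:
            return strips

    return None
```

For example, with a 1000-pixel-high image, 400 MB each for the histogram and density filtering buffer, a 4 MB final image, 500 MB available and a 150 MB allocation limit, this returns 4: two strips would fit the total but still exceed the single-allocation limit.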

The downside of using strips is that the number of iterations is multiplied by the number of strips. If your original image would take 1M iterations and you split it into 2 strips, the total needed is 2M. The cost of these additional iterations is somewhat mitigated: each strip's histogram is smaller than the full one, so more of the points computed for it fall outside its bounds and are never accumulated. The time spent computing each point stays the same, but less time is spent accumulating points to the histogram. Regardless, rendering with strips takes longer than rendering without them. With limited memory, however, there is no other choice.
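The tradeoff can be sketched with a simple cost model in Python. Everything here is an assumption made for illustration: the function name, the unit costs, and in particular the idea that points are spread evenly over the image so that each strip accumulates about 1/strips of the points computed for it.

```python
def strip_render_cost(base_iters, strips,
                      compute_cost=1.0, accumulate_cost=1.0):
    """Return (total_iterations, relative_cost) for a render using strips.

    Assumed model: every strip runs the full base iteration count, but only
    the points landing inside that strip's histogram are accumulated.
    """
    total_iters = base_iters * strips

    # Assumption: points are uniformly distributed, so 1/strips are in bounds.
    in_bounds_fraction = 1.0 / strips

    compute = total_iters * compute_cost
    accumulate = total_iters * in_bounds_fraction * accumulate_cost
    return total_iters, compute + accumulate


if __name__ == "__main__":
    for strips in (1, 2, 4):
        iters, cost = strip_render_cost(1_000_000, strips)
        print(f"{strips} strip(s): {iters} iterations, relative cost {cost:.0f}")
```

In this model, going from 1 strip to 2 doubles the iterations from 1M to 2M, but the relative cost rises from 2M to 3M units rather than doubling, because the accumulation work does not grow. Real renders will differ depending on how the flame's points are actually distributed.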

Memory concerns are greater on the GPU, which presents another tradeoff. GPUs are used to speed up rendering, but their limited memory can offset the performance gains by forcing the use of strips. Theoretically, there is a point where the number of strips becomes so great that rendering with strips on the GPU is slower than rendering without them on the CPU. In practice, this is unlikely to happen, so even when using strips, a GPU will usually be faster than a CPU.
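A back-of-the-envelope check for that break-even point might look like the Python sketch below. The function and its inputs are hypothetical, and it pessimistically assumes GPU render time grows linearly with the strip count; the per-strip savings on accumulation described earlier would make the real figure somewhat better.

```python
def gpu_with_strips_is_faster(cpu_seconds, gpu_seconds_single_strip, strips):
    """Compare an estimated GPU render using strips to a CPU render without.

    cpu_seconds: estimated CPU render time with no strips.
    gpu_seconds_single_strip: estimated GPU time for one full pass
        (a hypothetical figure, since the render may not fit unsplit).
    strips: number of strips used on the GPU.

    Assumption: GPU time scales linearly with the number of strips.
    """
    return gpu_seconds_single_strip * strips < cpu_seconds
```

With an estimated 600 seconds on the CPU and 20 seconds per pass on the GPU, 8 strips still favors the GPU (160 seconds), while 32 strips would not (640 seconds). In other words, under this model the GPU stays ahead as long as the strip count is below its speedup factor over the CPU.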

For instructions on how to use strips, see the Final Render dialog for Fractorium, and see EmberRender for the command line. Note that strips are only supported for still image renders and not for animations. This is usually not a problem, since the buffers for even the largest video resolutions, such as 4K and 5K, will most likely fit into memory.